Training own DNN for Deep Dream

by tsulej

Originaly posted on FB Deep Dream // Tutorials group under this link: https://www.facebook.com/groups/733099406836193/permalink/748784328601034/ A little bit messy. Give me an info if something is unclear or bullshit.

Ok, so, you’re bored, have spare time, have working caffe on gpu and want to try train network to get rid of dogs in deep dream images… Here is tutorial for you. In points.

- Forget about training from the scratch, only fine tune on googlenet. Building net from the scratch requires time, a lot of time, hundreds of hours… Read it first: http://caffe.berkeleyvision.org/gathered/examples/finetune_flickr_style.html.

- The hardest part: download 200-1000 images you want to use for training. I found that one type of images work well. Faces, porn, letters, animals, guns, etc.

- Resize all images into dimension of 256×256. Save it as truecolor jpgs (not grayscale, even if they are grayscale)

- OPTION: Calculate average color values of all your images. You need to know what is average value of red, green and blue of your set. I use command line tools convert and identify from ImageMagick: convert *.jpg -average res.png; identify -verbose res.png to see ‘mean’ for every channel.

- Create folder <caffe_path>/models/MYNET <- this will be your working folder. All folders and files you’ll create will be placed in MYNET

- Create folder named ‘images’ (in your working folder, MYNET)

- For every image you have create separate folder in ‘images’. I use numbers starting from 0. For example ‘images/0/firstimage.jpg’, ‘images/1/secondimage.jpg’, etc… Every folder is a category. So you end up with several folders with single image inside.

- Create text file called train.txt (and put it to the working folder). Every line of this file should be relative path of the image with the number of the image category. It looks like this:

images/0/firstimage.jpg 0

images/1/secondimage.jpg 1

… - Copy train.txt into val.txt file

- Copy deploy.prototxt, train_val.prototxt and solver.prototxt into working folder from this link: https://gist.github.com/tsulej/ff2b3e37aa76e8fbb244

- Next you need to edit all files, let’s start.

- train_val.prototxt:

- lines 13-15 (and 34-36) define mean values for your image set. For blue, green and red channels respectively (mind the reverse order). If you don’t know them just set all to 129

- line 19, define number of images processed at once. 40 works well on 4gb GPU. You need probably change it to 20 for 2gb gpu. You’ll get out of memory error if number is too high, then just lower it. You can set it to 1 as well.

- lines 917, 1680 and 2393. num_output should be set to number of your categories (number of your folders in image folder)

- deploy.prototxt:

- line 2141, num_output as above

- solver.prototxt, what is important below:

- display: 20 – print statistics every 20 iterations

- base_lr: 0.0005 – learning rate, it’s a subject to change. You’ll be observing loss value and adapt base_lr regarding results (see strategy below)

- max_iter: 200000 – how many iterations for training, you can put here even million.

- snapshot: 5000 – how often create snapshot and network file (here, every 5000 iterations). It’s important if you want to break training in the middle.

- Almost ready to train… but you need googlenet yet. Go to caffe/models/bvlc_googlenet and download this file (save it here) http://dl.caffe.berkeleyvision.org/bvlc_googlenet.caffemodel

- Really ready to train. Go to the working folder and run this command:

../../build/tools/caffe train -solver ./solver.prototxt -weights ../bvlc_googlenet/bvlc_googlenet.caffemodel

It should work. Every 5000 iterations you’ll get snapshot. You can break training then and run deepdream on your net. First results should be visible on inception_5b/output layer.

To restart training use a snapshot running this command:

../../build/tools/caffe train -solver ./solver.prototxt -snapshot ./MYNET_iter_5000.solverstate

Strategy for base_lr in solver for first 1000 iterations. Observe loss value. During the training it should be in average lower and lower and go towards 0.0. But:

- if you see ‘loss’ value during training is higher and higher – break and set base_lr 5 times less than current

- if you see ‘loss’ stuck at near some value and lowers but very slowly – break and set base_lr 5 times more than current

- if none of above strategy works – probably you have troubles and train failed. Change image set and start over.

Some insights:

- My GPU is NVIDIA GTX960 with 4gb RAM. Caffe is compiled with CuDNN library. Every 5k iterations take about 1 hour. On CPU calculations were 40 times slower.

- I usually stop after 40k of iterations. But did also 90k. DeepDickDream guy made 750k iterations to have dicks on Hulk Hogan (http://deepdickdreams.tumblr.com/)

- You have little chance to get your image set visible in deepdreams images before 100k of iterations. But you have high probability to see something new and no dogs

- Fine tune method means that you copy almost all information into your net from existing net except the layers called “classification” (three of them). To achieve this just rename layer name. You may decide and try not to copy other layers (eg. all inception_5b) to clean up more information. I have good and bad results using this method. To do this just change name of such layer.

- You can put more images into one category. You can use 20 categories with 200 images in it. You can use random images, same images, similar images, whatever you want. I couldn’t find a rule to get best results. It depends mostly on content I suppose.

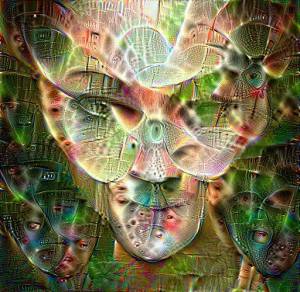

Examples:

Net trained on british library images (https://www.flickr.com/photos/britishlibrary/albums ). 11 categories. 100k images total (every image had 10 variants). inception layer 3, 4 and 5 were cleaned up. Result after 25k iterations. Faces from portrait album are clearly visible. This was my first and best try (among 20). Image from 5b/output layer.

Only letters album from british library images. 750 categories with one image in category. 40k iteration. Only classification layers cleaned up. Butterflies visible, but why? Layer 5b.

Same as above. 5a layer. Hourglasses.

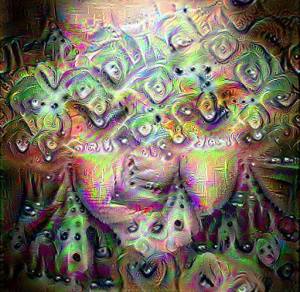

94 categories, 100 images each, porn image set. 90k iterations. default net set (only classification). Layer 5b.

As above but differently prepared image set (flip/flop, rotations, normalization/equalization, blur/unsharp, etc.). 40k iterations. Layer 5b.

Caffenet, built from scratch on 4 categories, 1000 glitch images each. 65k iterations. Pool5 layer.

Same image set as above. 80k iterations. googlenet with cleaned up 3, 4 and 5 layers. 5b layer.

Any questions? Comment it or write generateme.blog@gmail.com

Do you know of any way to automatically copy the multiple images into their respective folders?

I have images 0001.jpg – 1022.jpg and am trying to automate copying into folders 0 – 1021. I’ve found some examples using seq, but so far I’ve had no luck getting them to work, and I’m not getting any error output so I’m not sure where I’m going wrong.

LikeLiked by 1 person

I use bash scripting to automate such operations (cygwin under windows, terminal under macos/linux). Here is oneliner:

a=0; for i in *.jpg; do mkdir -p $a; mv $i $a; a=$((a+1)); done

LikeLike

Oh, thanks, I found some help elsewhere before I saw that you replied.

LikeLiked by 1 person

nevermind, I got it.

LikeLike

i write some code for help its make folder for every file and display log of all dir like images/0/0.jpg 0 etc and display mean of all img added together

import os, random, sys

import numpy as np

import cv2

import scipy.misc

NUM_IMAGES = int(input(‘img number?’))

IMAGE_W = 224

IMAGE_H = 224

IMAGE_DIR = ‘PICTURES’

NUM_CHANNELS = 3

i=0

j=0

alle = [0,0,0]

for root, subdirs, files in os.walk(IMAGE_DIR):

for file in files:

copy = file

path = root + “\\” + file

create = root + “\\”+ str(j)+”\\”

if os.path.exists(create) == False:

os.makedirs(create)

path2 = root + “\\”+ str(j)+”\\” + copy

if not (path.endswith(‘.jpg’) or path.endswith(‘.png’)):

continue

img = cv2.imread(path)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB) # hash here if u want bgr as orginal and swap chanel in your deepdreamer

img = cv2.resize(img, (IMAGE_W , IMAGE_H))

alle += img

scipy.misc.toimage(img, cmin=0.0, cmax=255.0).save(path2)

pathtosave = ‘images’ + “/”+ str(j)+”/” + copy + ‘ ‘ + str(i)

print(pathtosave)

i=i+1

if i % 1 == 0:

j = j + 1

scipy.misc.toimage((alle/i), cmin=0.0, cmax=255.0).save(‘.\men.jpg’)

np.mean((alle/i), axis=(0,1))

LikeLike

Is there anyway to get this to output the accuracy as it’s running? I noticed on the caffe site it shows accuracy being shown along with loss.

LikeLiked by 1 person

Try to remove these lines from accuracy layers in train_val.prototxt.

include {

phase: TEST

}

It should work (haven’t tested).

LikeLike

Thanks, I commented out those lines, this is how the output looks now.

Train net output #0: loss1/loss1 = 0.0101866 (* 0.3 = 0.00305598 loss)

Train net output #1: loss1/top-1 = 1

Train net output #2: loss1/top-5 = 1

Train net output #3: loss2/loss1 = 0.0140079 (* 0.3 = 0.00420237 loss)

Train net output #4: loss2/top-1 = 1

Train net output #5: loss2/top-5 = 1

Train net output #6: loss3/loss3 = 0.0252537 (* 1 = 0.0252537 loss)

Train net output #7: loss3/top-1 = 1

LikeLiked by 1 person

I forgot it only is set to test every 10000 iterations

LikeLike

Still nothing for accuracy after the next 10000 iterations. “solver.cpp:281] Iteration 50000, Testing net (#0)” That line is always blank instead of showing accuracy. I think I must have something wrong.

LikeLike

So probably I cannot help. Is it more important than loss? It probably measures accuracy of classification on test set. And may be that for train set accuracy will be always 1. I think loss is real measure for net train amount.

LikeLike

Ok, thanks anyway. I thought it might be important, because I saw posts online with people saying their loss was going down, but accuracy was not going up, so I was wondering if everything was actually working right.

I’ve been running it remotely and haven’t been home in a couple of days, but I’ll be able to test it in deepdream tonight after work. Thanks for your help.

LikeLiked by 1 person

Share with results necessarily!

LikeLike

I have tried joining your facebook group but have not been accepted yet. I do have another question though. I tried running an image through deepdream at 85000 iterations and “dogslug” is mostly good, but it doesn’t appear that my images are showing yet. I tried again at 12500 images, and it still looks about the same. On my training, since it got below 1 loss, it has been going up and down from (example) .1xxxxxxx to .000xxxxxx does it mean that it’s not learning any more if the loss is still not going down after this many itterations, or do I just need to let it keep going?

LikeLike

If you have FB account, catch me there. It will be easier to speak using fb messanger. My account: https://www.facebook.com/tsulej1

Regarding group, I’m not an admin there so I can’t approve access (I messaged to admin to do it).

If you have loss varying from 0.1 to .0001 – something is wrong. In such case I got nothing. I don’t know what your image set contains. But I observed that when I used sharp edged letters I had a lot of problems with learning stabilisation. Probably such images are completely different than used for training so network is unable to rebuild.

LikeLike

Reblogged this on Howl.

LikeLike

Hi there 🙂 I’m new to neural networks and deep learning, but I have already set everything up, to make deep dreams on windows with anaconda via gpu etc. I have done everything you wrote in this tutorial, but i have problem with last step.

../../build/tools/caffe train -solver ./solver.prototxt -weights ../bvlc_googlenet/bvlc_googlenet.caffemodel

anaconda prompt says: “..” is note recognized as internal or external command, operable program or batch file.

On the facebook group, someone had similar problem, so I tried to change the path to D:/blah/something/anaconda2/caffe/tools/caffe train -solver ./solver.prototxt -weights ../bvlc_googlenet/bvlc_googlenet.caffemodel

but the same error appeared.

LikeLike

try to use backslash ‘\’ or double backslash ‘\\’ instead of slash ‘/’

LikeLike

Hi there, I am trying to use transfer learning as you described above but when my class count is different from googlenet network (they used 1k classes and I have 450) caffe complains that there is a mismatch. Do I have to have same number of classes for trained network and the new network. You mentioned using different classes, curious did you face this issue?

Thanks

LikeLike

As far as I remember I used different classes for newly created network and trained from the scratch. For fine tune method structure of the network can’t be changed.

LikeLike

Is loss supposed to stay around 0.01-0.001 or is it supposed to go towards 0? when I start training it starts from about 10 and quickly goes to 0.01 and stays there. I have used 2 different imagesets and tried different learning rates.

LikeLike

should be lower and lower, but ability of learn in networks is limited and in some conditions it stucks. Try to manipulate base_lr parameter (lower/higher).

LikeLike

Hi there. Nice tutorial, I used to use them on my Ubuntu PC. Now I’m trying to accomplish the same thing using a Windows 10 OS.

Now, my setup is Anaconda2 with Caffe using this tutorial.

http://thirdeyesqueegee.com/deepdream/2015/07/19/running-googles-deep-dream-on-windows-with-or-without-cuda-the-easy-way/

As the tutorial describes they pick a CUDA Caffe version.

https://github.com/yang1fan2/nlpcaffe-windows/blob/master/docs/tutorial/interfaces.md

As I imagine, is possible to train using the Jupyter or iPython, but do you know how to make it?

../../build/tools/caffe train -solver ./solver.prototxt -weights ../bvlc_googlenet/bvlc_googlenet.caffemodel

Is implicit the trainning follow the folders?

The problem is that I cannot make the cmdcaffe to work, so I cannot debug what exactly happens in this process.

LikeLike

I can’t help you here. Never got run caffe on windows.

LikeLike

Thanks for this tuto. works on windows 10 with CUDA 8

LikeLike

[…] Image source: https://generateme.wordpress.com/2015/08/12/training-own-dnn-for-deep-dream/ […]

LikeLike

# Fast Style Transfer in [ML5](https://github.com/ITPNYU/ml5-js)

This is a demo of Fast Style Transfer in [ml5](https://github.com/ITPNYU/ml5-js)

Check it out here: [https://yining1023.github.io/fast_style_transfer_in_ML5](https://yining1023.github.io/fast_style_transfer_in_ML5)

Credits:

The code and models are based on [deeplearn.js demo](https://github.com/PAIR-code/deeplearnjs/tree/0608feadbd897bca6ec7abf3340515fe5f2de1c2/demos/fast-style-transfer) by [reiinakano](https://github.com/reiinakano)

LikeLike